The TimeCopilot Project, Modern Deep Learning Foundations Book, New Tutorials

A weekly curated update on data science and engineering topics and resources.

Hi folks,

Last week, this newsletter reached its 1st anniversary. What started as an experiment is now updated weekly. I want to thank each of you for your support!

This year, the newsletter gained more than 32,000 subscribers on LinkedIn and Substack, and starting this week, we’re expanding to Medium!

If you’re enjoying this newsletter, here’s how you can support it:

♻️ Please share this with others interested in these topics.

If you have a Medium subscription, please read this newsletter on Medium.

If you have a LinkedIn Learning subscription, please check out my courses.

This week's agenda:

Open Source of the Week - The TimeCopilot Project

New learning resources - LLM & RAG evaluation playbook, Git course, deploy AI app to Hugging Face Spaces, n8n tutorials, building LLMs from scratch

Book of the week - Modern Deep Learning Foundations by Dr. Barak Or

I share daily updates on Substack, Facebook, Telegram, WhatsApp, and Viber.

Are you interested in learning how to set up automation using GitHub Actions? If so, please check out my course on LinkedIn Learning.

Open Source of the Week

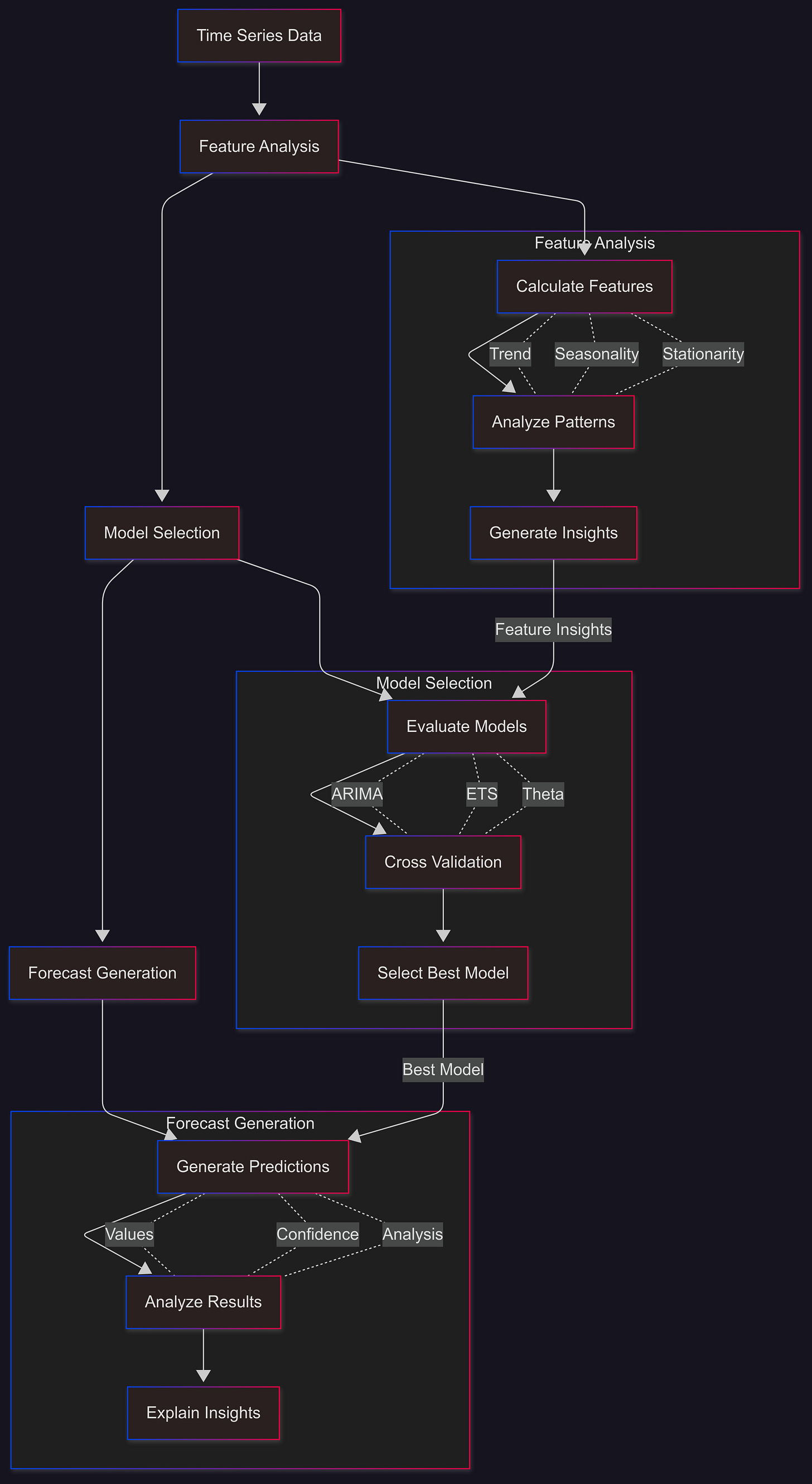

This week’s focus is on TimeCopilot by Azul Garza, an agentic framework for time series forecasting. As a time series person, I find this one of the most interesting projects I have come across recently. The framework enables users to interact with time series data using natural language.

Project repo: https://github.com/AzulGarza/TimeCopilot

Project Highlights and Key Features

An agentic framework for working with time series data, supporting forecasting and anomaly detection applications

Integration with foundation models such as Nixtla TimeGPT, Amazon Chronos, Google TimesFM, and Salesforce Moirai

Support for classical statistical, machine learning, and neural network models

Experimentation, testing, and benchmarking across a wide range of forecasting models, with selection of the best-performing model
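To make the benchmarking idea concrete, here is a minimal, standard-library-only sketch of comparing a few baseline forecasters on a holdout window and picking the one with the lowest error. This is not TimeCopilot's API: the function names, the toy series, and the choice of MAE as the metric are all assumptions made for illustration.

```python
from statistics import mean


def naive_forecast(history, horizon):
    """Repeat the last observed value for every future step."""
    return [history[-1]] * horizon


def mean_forecast(history, horizon):
    """Repeat the historical mean for every future step."""
    return [mean(history)] * horizon


def seasonal_naive_forecast(history, horizon, season_length=4):
    """Repeat the last full season (season_length is an assumed value)."""
    last_season = history[-season_length:]
    return [last_season[i % season_length] for i in range(horizon)]


def mae(actual, predicted):
    """Mean absolute error between two equally long sequences."""
    return mean(abs(a - p) for a, p in zip(actual, predicted))


def benchmark(series, horizon, models):
    """Hold out the last `horizon` points, score each model, return the best."""
    train, test = series[:-horizon], series[-horizon:]
    scores = {name: mae(test, model(train, horizon)) for name, model in models.items()}
    best = min(scores, key=scores.get)
    return best, scores


# Toy quarterly-style series with a clear seasonal pattern (made-up data).
series = [10, 20, 30, 40, 11, 21, 31, 41, 12, 22, 32, 42]
models = {
    "naive": naive_forecast,
    "mean": mean_forecast,
    "seasonal_naive": seasonal_naive_forecast,
}
best, scores = benchmark(series, horizon=4, models=models)
print(best, scores)
```

On this toy series the seasonal baseline wins because the data repeats every four steps. A real framework would typically use cross-validation over several windows rather than a single holdout.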

More details are available in the project documentation.

License: MIT

New Learning Resources

Here are some new learning resources that I came across this week.

LLM & RAG Evaluation Playbook for Production Apps

This one-hour workshop focuses on evaluating LLM/RAG apps before deploying to production, and it covers the following topics:

Add a prompt monitoring layer

Visualize the quality of the embeddings

Evaluate the context from the retrieval step used for RAG

Compute application-level metrics to expose hallucinations, moderation issues, and performance (using LLM-as-judges)

Log the metrics to a prompt management tool to compare the experiments
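As a rough illustration of what evaluating the retrieval step can look like, the sketch below computes precision@k and recall@k for a single query. The workshop may use different metrics or tooling; the function names, document IDs, and relevance labels here are hypothetical.

```python
def precision_at_k(retrieved, relevant, k):
    """Fraction of the top-k retrieved documents that are relevant."""
    top_k = retrieved[:k]
    if not top_k:
        return 0.0
    hits = sum(1 for doc_id in top_k if doc_id in relevant)
    return hits / len(top_k)


def recall_at_k(retrieved, relevant, k):
    """Fraction of all relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / len(relevant)


# Hypothetical retriever output (ranked) and hand-labeled relevant documents.
retrieved = ["d3", "d7", "d1", "d9"]
relevant = {"d1", "d3", "d5"}

p = precision_at_k(retrieved, relevant, k=3)
r = recall_at_k(retrieved, relevant, k=3)
print(f"precision@3={p:.2f} recall@3={r:.2f}")
```

Averaging these scores over a labeled set of queries gives one number per experiment, which is the kind of metric that can then be logged and compared across runs.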

Git Crash Course

This looks like a great “getting started” course for Git, and it covers the foundations of version control. This includes topics such as:

Setup

Creating a new Git repository

Staging files

Committing changes

Deleting and untracking files

Viewing the project history

Deploy AI App to Hugging Face Spaces

The following tutorial by Shaw Talebi provides a step-by-step guide to deploying a Streamlit app to Hugging Face Spaces with Docker.

Getting Started with Self-Hosting n8n

The following tutorial provides an in-depth guide for setting up and deploying automation with n8n.

n8n tutorial

The following n8n tutorial provides a step-by-step guide for self-hosting an n8n server to automate tasks using Docker.

Build an AI Agent

This tutorial by freeCodeCamp contains three workshops focusing on building AI agents:

Workshop 1: Building Voice Agents with LiveKit and Cerebras

Workshop 2: Creating Research Assistants with Exa and Cerebras

Workshop 3: Developing Multi-Agent Workflows with LangChain and Cerebras

Building LLMs from Scratch

This new tutorial from freeCodeCamp provides a complete guide for building a large language model from scratch using pure PyTorch. The course spans the entire lifecycle, from foundational concepts to advanced alignment techniques, and it covers the following topics:

Introduction

Core Transformer Architecture

Training a Tiny LLM

Modernizing the Architecture

Scaling Up

Mixture-of-Experts (MoE)

Supervised Fine-Tuning (SFT)

Reward Modeling

RLHF with PPO

Book of the Week

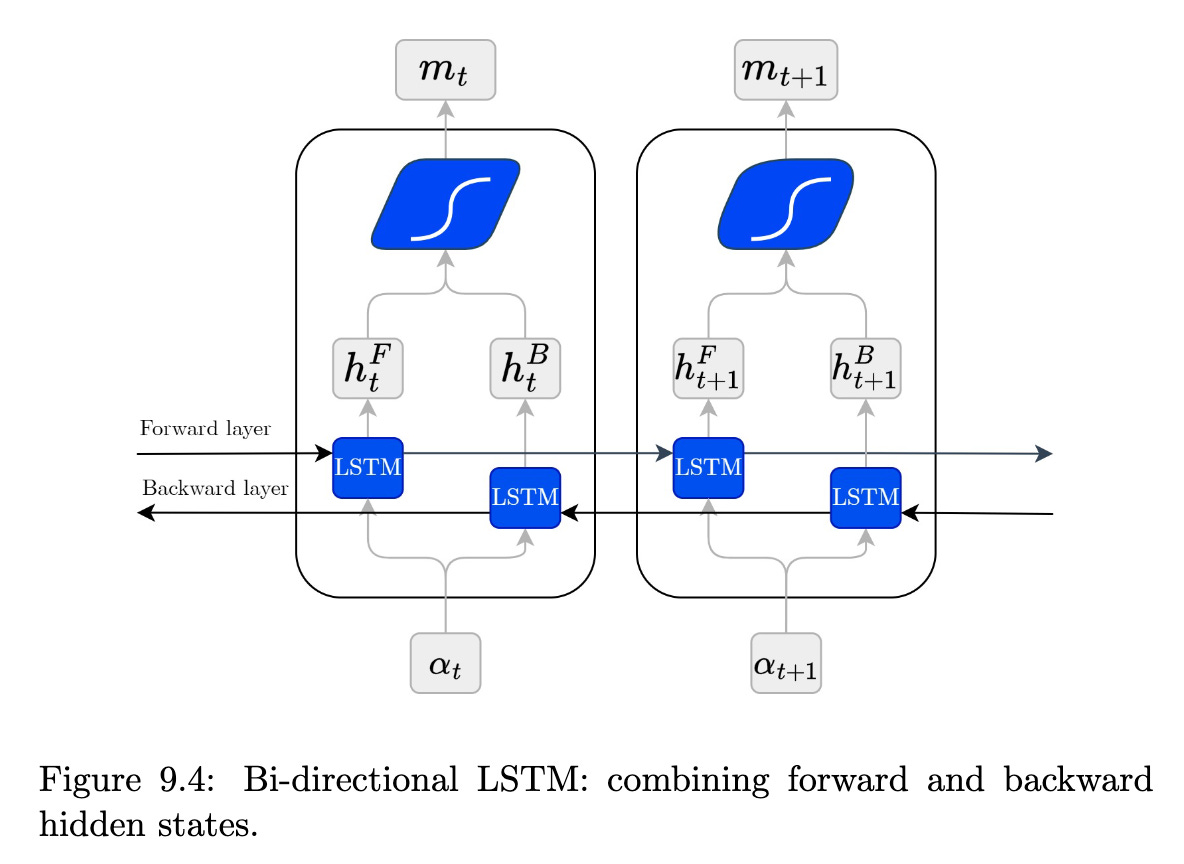

This week’s focus is on a new deep learning book - Modern Deep Learning Foundations by Dr. Barak Or. The book, as the name implies, focuses on the foundations of deep learning, and it covers the following topics:

Machine Learning vs. Deep Learning – fundamental distinctions

Neurons, layers, activation functions, and feedforward networks

Backpropagation, loss functions, optimization, and hands-on training

Overfitting, regularization methods, and selecting performance metrics

CNNs and RNNs, dimensionality reduction, Transformers

Industrial infrastructure: Colab, model saving, building APIs with FastAPI

Preparation for further specialization in CV, Tabular Data, Time Series, and LLMs

The book is ideal for software engineers, data scientists, researchers, and developers with a background in Python who are looking to build a strong foundation in deep learning and work with industry-relevant models.

Thanks to the author, the book is available for free on the author’s website.

Have any questions? Please comment below!

See you next Saturday!

Thanks,

Rami